Social Media’s Legal Shield Is Built on a Lie

Understanding Section 230 in Reality, Not the Prevailing Narrative

For decades, social media’s legal shield has protected platforms like Twitter, Facebook, and Truth Social. They have enjoyed the broad protections of Section 230 of the Communications Decency Act, but there’s one glaring problem: they don’t actually qualify for those protections under the law as it’s written.

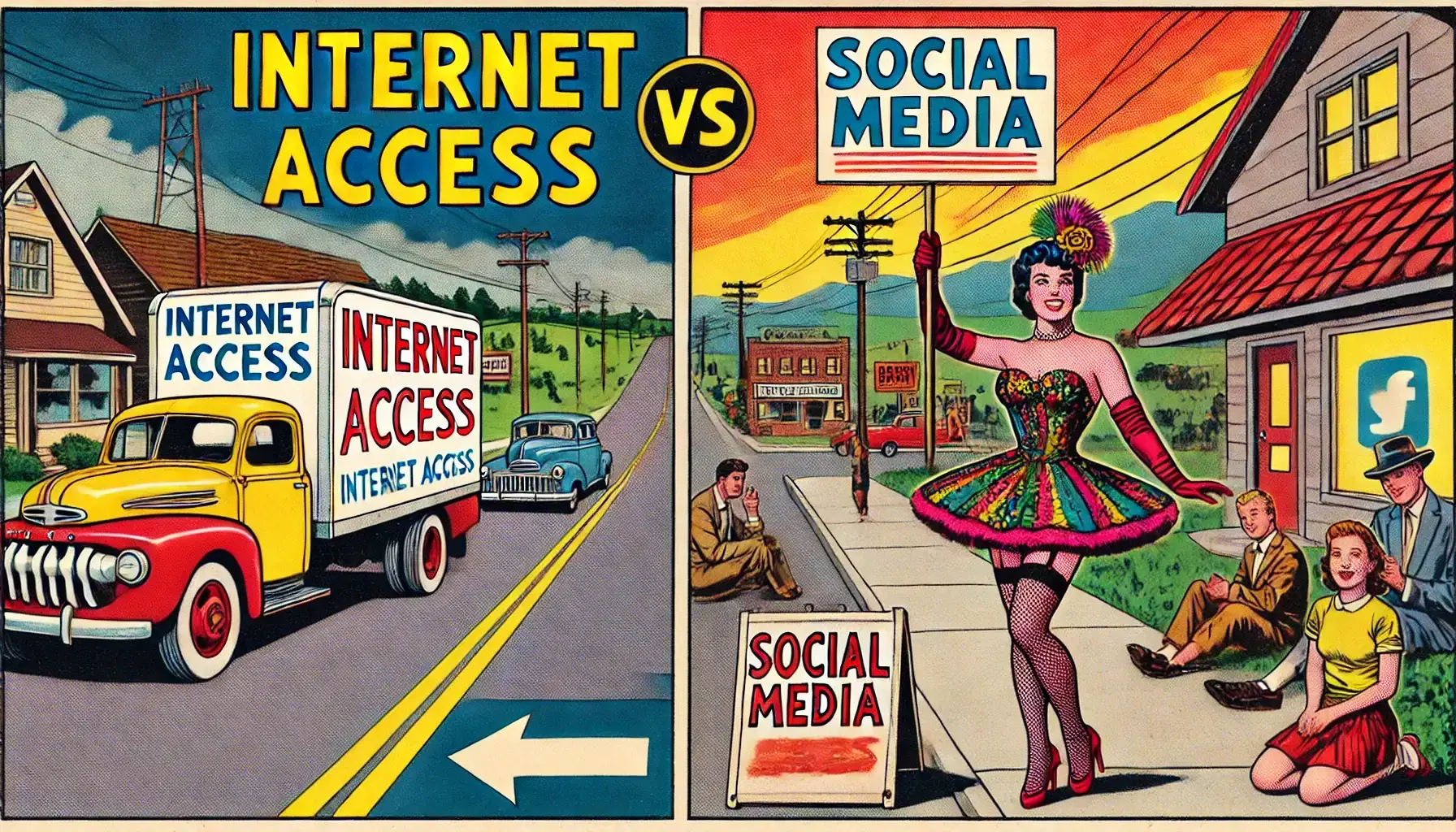

Section 230 was drafted in 1996, long before the advent of modern social media, to protect passive intermediaries such as internet service providers (ISPs), email providers, and bulletin board systems (BBS). These entities provide neutral infrastructure for users to communicate without moderating, curating, or amplifying content. Social media companies, on the other hand, operate actively—publishing, curating, broadcasting, and profiting from user content. This distinction places them firmly in the realm of publishers, making them liable for the content they host, amplify, and profit from.

It’s time to end the legal fiction that social media is a neutral intermediary deserving of blanket immunity. Here’s why.

What Section 230 Protects—and What It Doesn’t

Protected: Passive Infrastructure Providers

Section 230 defines “interactive computer services” as entities that provide access to the internet or computer networks. For example:

- Internet Service Providers (ISPs)

Companies like Comcast facilitate access to the internet, enabling users to send emails, visit websites, and engage in lawful online activities. Their role is inherently passive: they do not monitor or curate content, and they provide only the technical infrastructure.

- Telephone Networks

Comcast’s provision of telephone services ensures that users can connect with any other phone. Just as the telephone company isn’t responsible for illegal activities discussed during a call, ISPs are not liable for the lawful or unlawful activities conducted over their networks.

- Key Protections Under Section 230:

- ISPs and telephone networks cannot be held liable for user actions facilitated by their neutral services.

- Privacy protections ensure these providers cannot unlawfully spy on users or provide data as evidence in court without due process.


Not Protected: Active Publishers

Social media platforms, by contrast, are not passive facilitators of communication. They operate as active publishers:

- Facebook’s “Wall” or Twitter’s Feed

- These features curate content, promote certain posts, and suppress others, making platforms active participants in the distribution of information.

- Corporate and Owner Participation

- Platforms like Facebook publish their own content on their platforms, and platform owners, such as Elon Musk or Donald Trump, use these spaces to actively broadcast their own messages.

- Algorithmic Amplification

- Social media platforms deploy algorithms to promote content that maximizes user engagement, often at the expense of truth or safety.

- Key Distinction: Social media platforms are more like a channel on the internet than “the internet” itself. If Comcast’s cable network allowed a channel to broadcast child pornography, it would face immediate liability—and rightly so. Social media platforms must be held to the same standard.

The Fragmented Reality of Social Media

Social media platforms are not a single entity but an amalgamation of functionalities, each raising unique legal and ethical challenges. These include:

- Public Blogs

- Platforms like Twitter and Facebook essentially act as blogging tools where users post publicly visible content.

- Message Boards

- Social media functions as a digital message board, enabling community-driven discussions. However, these boards require moderation and curation, making platforms more akin to publishers.

- Direct Messaging

- Private communication features mirror email or texting but raise privacy concerns under surveillance laws like the Patriot Act.

- Broadcasting

- Livestreaming and public posts turn platforms into broadcasting tools with wide reach.

- Advertising

- Social media derives much of its revenue from advertising, amplifying and pairing ads with user content in ways that directly involve the platform in content dissemination.

- File Sharing

- Platforms host and distribute user-uploaded files, such as videos or images, making them complicit in illegal or harmful content if they fail to moderate it.

The Message Board Problem: Social Media’s Core Value

The most valuable aspect of social media is its role as a digital message board, where users post and interact with shared content. However, message boards inherently require moderation, which undermines any claim to neutrality. By curating and amplifying posts, social media companies act as publishers and should be held liable for the harm caused by their editorial decisions.

The lack of a clear legal definition for social media platforms makes it nearly impossible to litigate against them effectively. Courts cannot consistently address liability when platforms operate simultaneously as blogs, message boards, broadcasters, and advertising networks.

The Patriot Trap and the Illusion of Power

The public has been misled into believing that social media platforms are the ultimate public square for exercising First Amendment rights. Meanwhile, laws like the Patriot Act have created an environment of surveillance and self-censorship, discouraging the use of more protected communication methods like email and telephone calls. The result is a Patriot Trap: people become too afraid even to think for themselves, and opposing the federal government is portrayed as impossible.

Social media’s inflated role in public discourse compounds this chilling effect: users come to believe their protests and speech are fully protected on platforms that regularly censor content and manipulate engagement for profit.

Calls to repeal Section 230 are often masked as efforts to protect free speech, but they could unintentionally undermine the privacy and integrity of telephonic and email communication—the very services Section 230 was designed to protect.

A Shopping Mall of Horrors: The True Role of Social Media

Imagine a shopping mall where crimes such as child exploitation are recorded and distributed, and the mall owner:

- Deletes evidence to protect their reputation, or

- Profits from people sharing and engaging with the content.

This is the reality of social media today. Platforms profit from harmful content under the guise of neutrality, even as they moderate and curate content for financial gain. If a shopping mall operator acted this way, they’d face immediate criminal charges. Social media platforms must be held to the same standard.

Reforming Social Media for Accountability

Social media companies must fundamentally restructure their business models to ensure harmful content cannot proliferate. This may involve:

- Radical Content Moderation

- Platforms must adopt systems that prevent illegal or harmful content from appearing or spreading, no matter the cost to engagement metrics.

- Adapting to Lower Valuations

- If these changes reduce Facebook’s valuation from over $1 trillion to $10 billion, so be it. The public and regulators must not prioritize protecting the inflated valuations of companies whose harm outweighs their value.

- Redefining Their Role

- Social media companies must stop pretending to be neutral intermediaries and accept their role as publishers with clear responsibilities.

Accountability Over Valuation

Social media companies like Facebook and Twitter are not “the internet.” They are publishers, broadcasters, and curators of content, and their actions have real consequences. Section 230 was never meant to protect businesses that profit from harm while avoiding accountability. It’s time for Congress and the courts to end this legal fiction and demand real reform.

Accountability isn’t just a legal necessity—it’s a moral imperative for a just and transparent digital future.